What Is F1 Score In Machine Learning

Accuracy, precision, recall, F1-score

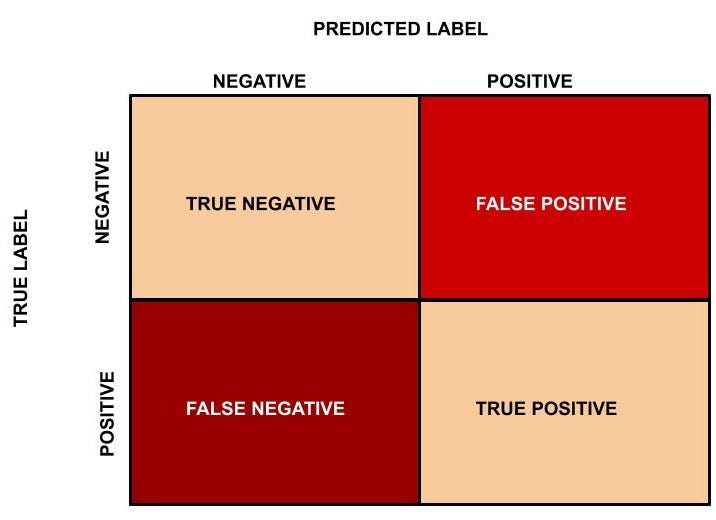

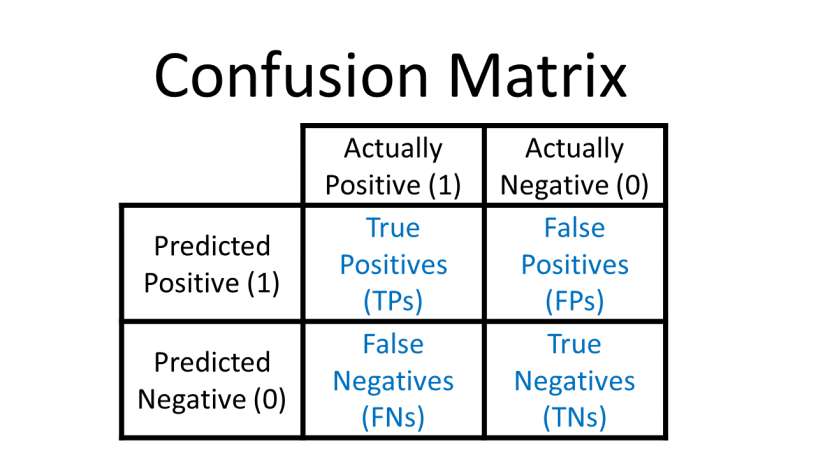

The F1 score is the harmonic mean of precision and recall, and it is often a better measure than accuracy. It ranges from 0 to 1, becomes 1 only when precision and recall are both 1, and is high only when both precision and recall are high.

In the purchaser example (the pregnancy example uses the same figures), precision and recall are 0.857 and 0.75, so

$$F_1 = \frac{2 \times 0.857 \times 0.75}{0.857 + 0.75} \approx 0.80$$

The F1-score takes both precision and recall into account and folds them into one single metric. It is needed when both how accurate your shown ads are and how many of your ads are shown matter to you. We've established that accuracy means the percentage of positives and negatives identified correctly.

F1-Score: Combining Precision and Recall. If we want our model to have a balanced precision and recall score, we average them to get a single metric. Here comes the F1 score, the harmonic mean of precision and recall, where $\text{Recall} = \frac{TP}{TP + FN}$.

The F1 score is often described as the weighted average of precision and recall (more precisely, their harmonic mean). It therefore takes both false positives and false negatives into account. Intuitively it is not as easy to understand as accuracy, but F1 is usually more useful than accuracy, especially if you have an uneven class distribution.
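The worked example above can be checked with a few lines of Python. This is a minimal sketch: the helper name `f1_from_precision_recall` is illustrative rather than taken from any library, and returning 0.0 when both inputs are zero is an assumed convention.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention to avoid dividing by zero
    return 2 * precision * recall / (precision + recall)


# The 0.857 / 0.75 example from the text.
example_f1 = f1_from_precision_recall(0.857, 0.75)
print(round(example_f1, 3))

# F1 reaches 1 only when both inputs are 1.
perfect_f1 = f1_from_precision_recall(1.0, 1.0)
print(perfect_f1)
```

Because the formula is symmetric, swapping the two arguments gives the same score.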

Formula for F1 Score. We use the harmonic mean rather than the arithmetic mean because we want a low recall or a low precision to produce a low F1 score. In our previous case, where we had a recall of 100% and a precision of 20%, the arithmetic mean would be 60% while the harmonic mean would be 33.33%.

Mathematically, F1 can be represented as the harmonic mean of the precision and recall scores:

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

For the confusion matrix in that example, where precision and recall are both 0.972, the F1 score comes out as:

$$F_1 = \frac{2 \times 0.972 \times 0.972}{0.972 + 0.972} = \frac{1.89}{1.944} \approx 0.972$$

F1 is an overall measure of a model's accuracy that combines precision and recall. A high F1 score means that you have low false positives and low false negatives.

I made a huge mistake. I printed the output of a scikit-learn SVM's accuracy as `str(metrics.classification_report(trainExpected, trainPredict, digits=6))`. Now I need to calculate accuracy from the following output:

| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 1 | 0.000000 | 0.000000 | 0.000000 | 1259 |
| 2 | 0.500397 | 1.000000 | 0.667019 | 1261 |
| avg / total | 0.250397 | 0.500397 | 0.333774 | |
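Accuracy can be recovered from a report like the one quoted above, because each class's recall multiplied by its support is the number of correctly classified samples in that class. The sketch below assumes the two per-class rows are the whole report; `report_rows` is a hypothetical name, and with rounded recall values the result is in general only approximate.

```python
# Per-class rows copied from the report in the text: label -> (recall, support).
report_rows = {
    "1": (0.000000, 1259),
    "2": (1.000000, 1261),
}

# recall * support = correctly classified samples in that class
correct = sum(recall * support for recall, support in report_rows.values())
total = sum(support for _, support in report_rows.values())

accuracy = correct / total
print(f"accuracy = {accuracy:.6f}")
```

The result equals the support-weighted average of the per-class recalls, which is the recall value shown in the report's avg / total row.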

Accuracy, Precision, Recall, F1 Score.

Precision: when it predicts yes (the person likes dogs), how often is it actually correct? Recall: when it is actually yes (the person likes dogs), how often does it predict correctly?

F1 Score. Now, if you read a lot of other literature on precision and recall, you cannot avoid the other measure, F1, which is a function of precision and recall. Looking at Wikipedia, the formula is as follows:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

Sometimes accuracy alone is not a good evaluation measure, which is why we take precision and recall into consideration. To capture the combined effect of precision and recall, we use the F1 score, the harmonic mean of the two:

$$F_1 = \frac{2}{\frac{1}{\text{Precision}} + \frac{1}{\text{Recall}}}$$

Averaging precision and recall over all classes gives us global precision and recall scores that we can then use to compute a global F1 score. This F1 score is known as the macro-average F1 score. Our average precision over all classes is …
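The macro-averaging procedure described above (average precision and recall over classes, then combine them) might be sketched as follows. The function names and the toy labels are hypothetical, and empty denominators are treated as 0.0 by assumption. Note that another common definition, which as far as I know is what scikit-learn's `f1_score(average="macro")` uses, averages the per-class F1 scores instead, so the two can give different numbers.

```python
def precision_recall_for(label, y_true, y_pred):
    """One-vs-rest precision and recall for a single class label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def macro_f1_from_averages(y_true, y_pred):
    """Average per-class precision and recall, then take their harmonic mean."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = [precision_recall_for(label, y_true, y_pred) for label in labels]
    macro_precision = sum(p for p, _ in scores) / len(labels)
    macro_recall = sum(r for _, r in scores) / len(labels)
    if macro_precision + macro_recall == 0:
        return 0.0
    return 2 * macro_precision * macro_recall / (macro_precision + macro_recall)


# Toy three-class data, invented purely for illustration.
y_true = ["cat", "cat", "dog", "dog", "bird", "bird"]
y_pred = ["cat", "dog", "dog", "dog", "bird", "cat"]

macro_f1 = macro_f1_from_averages(y_true, y_pred)
print(round(macro_f1, 4))
```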

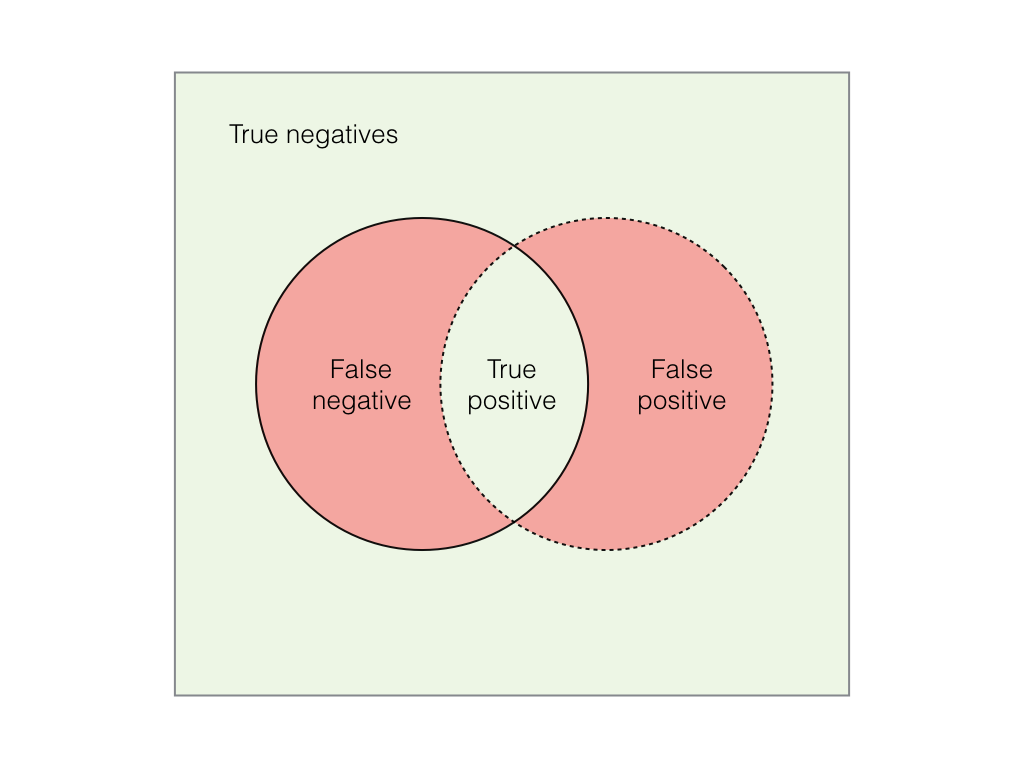

F1-score when precision = 1.0 and recall varies from 0.01 to 1.0. Image by Author.

This is to say that, regardless of which one is higher or lower, the overall F1-score is impacted in the exact same way (which seems quite obvious from the formula but is easy to forget).

F1-score when precision = 0.8 and recall varies from 0.01 to 1.0.

The F1 score is a blend of the precision and recall of the model, which makes it a bit harder to interpret. As a rule of thumb, we often use accuracy when the classes are balanced and there is no major downside to predicting false negatives.

Recall is 0.2 (pretty bad) and precision is 1.0 (perfect), but accuracy, clocking in at 0.999, isn't reflecting how badly the model did at catching those dog pictures. The F1 score, equal to 0.33, captures the poor balance between recall and precision.

How can I print precision, accuracy, recall and f1-score values side by side, as shown in the image? `for name, model in models: model = model.fit(X_train, Y_train) Y_pre…`

F1 Score. It is termed the harmonic mean of precision and recall, and it can give a better picture of incorrectly classified cases than the accuracy metric. It can be a better measure to use if we need to seek a balance between precision and recall. Also, if there is a class imbalance (a large number of actual negatives and fewer actual …
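To make the dog-picture figures concrete, here is a small sketch with hypothetical confusion-matrix counts, chosen only so that recall is 0.2, precision is 1.0 and accuracy is 0.999; the original example's actual counts are not given in the text. It also prints F1 for a few recall values with precision fixed at 1.0.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero, by assumption)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 5 dog pictures among 4000 images, only 1 of them caught.
tp, fn, fp, tn = 1, 4, 0, 3995

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
dog_f1 = f1(precision, recall)

print(f"accuracy={accuracy:.3f} precision={precision:.1f} "
      f"recall={recall:.1f} f1={dog_f1:.2f}")

# With precision fixed at 1.0, F1 is dragged down by low recall.
for r in (0.01, 0.2, 0.5, 1.0):
    print(f"recall={r:.2f} -> f1={f1(1.0, r):.3f}")
```

Accuracy stays high because the 3995 true negatives dominate the count, while F1 ignores true negatives entirely.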

Explain the difference between precision and recall, explain what an F1 score is, how important is accuracy to a classification model? It's easy to get confused and mix these terms up with one another, so I thought it'd be a good idea to break each one down and examine why they're important.

As shown in Table 2, we analyzed the performance of CNN and DNN models in terms of accuracy, recall, F1 score and test accuracy. In most indicators of all categories, the evaluation indicators of the MFDNN model are higher than those of other models. In terms of test accuracy, the MFDNN model is 3.33% higher than the DNN model.

$$\text{Accuracy} = \frac{990 + 989{,}010}{1{,}000{,}000} = 0.99 = 99\%$$

3.4. F1 Score. The F1 score is the weighted average, or harmonic mean, of precision and recall. Therefore, this score takes both false positives and false negatives into account to strike a balance between precision and recall.

Additionally, we use accuracy, recall, precision, F1-score and runtime as evaluation metrics. For detection performance, the detection accuracy of our proposed model is 95.11% for UNSW-NB15 and 99.92% for CICIDS2017, which is better than most state-of-the-art intrusion detection methods.

Secondly, precision, recall, F1 score and the PR (precision-recall) curve are also investigated with respect to confidence score. The precision curve is illustrated in Fig. 15(a).

Accuracy, Recall, Precision, F1 Score in Python. Precision and recall are two crucial yet misunderstood topics in machine learning. They often pop up on lists of common interview questions for data science positions: describe the difference between precision and recall, explain what an F1 score is, how important is accuracy to a classification model?

F1 Score: The F1 score measures the accuracy of the model's performance on the input dataset. It is the harmonic mean of precision and recall. All these measures should be as high as possible, which indicates better model accuracy. This recipe gives an example of how to calculate precision, recall and F1 score in R. Step 1 - Define two vectors …

$$F_1 = \frac{2\,TP}{2\,TP + FP + FN}$$

Accuracy, precision, recall, F1 score. We want to pay special attention to accuracy, precision, recall, and the F1 score. Accuracy is a very intuitive performance metric: it is simply the ratio of all correctly predicted cases, whether positive or negative, to all cases in the data.
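All four metrics can be derived from the four confusion-matrix counts. The sketch below is pure Python with a hypothetical helper name, `classification_metrics`, and made-up counts; it computes F1 in the count form, 2TP / (2TP + FP + FN), which is algebraically the same as the harmonic mean of precision and recall.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy, precision, recall and F1 from confusion-matrix counts.

    Assumes at least one sample; empty denominators yield 0.0 by convention.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1_denominator = 2 * tp + fp + fn
    f1 = 2 * tp / f1_denominator if f1_denominator else 0.0
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Made-up counts for illustration: 6 TP, 1 FP, 2 FN, 11 TN.
metrics = classification_metrics(tp=6, fp=1, fn=2, tn=11)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")

# Cross-check the count form of F1 against the harmonic-mean form.
harmonic_f1 = (2 * metrics["precision"] * metrics["recall"]
               / (metrics["precision"] + metrics["recall"]))
```

With these counts precision is 6/7 and recall is 6/8, so both forms of the formula give an F1 of 0.8.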

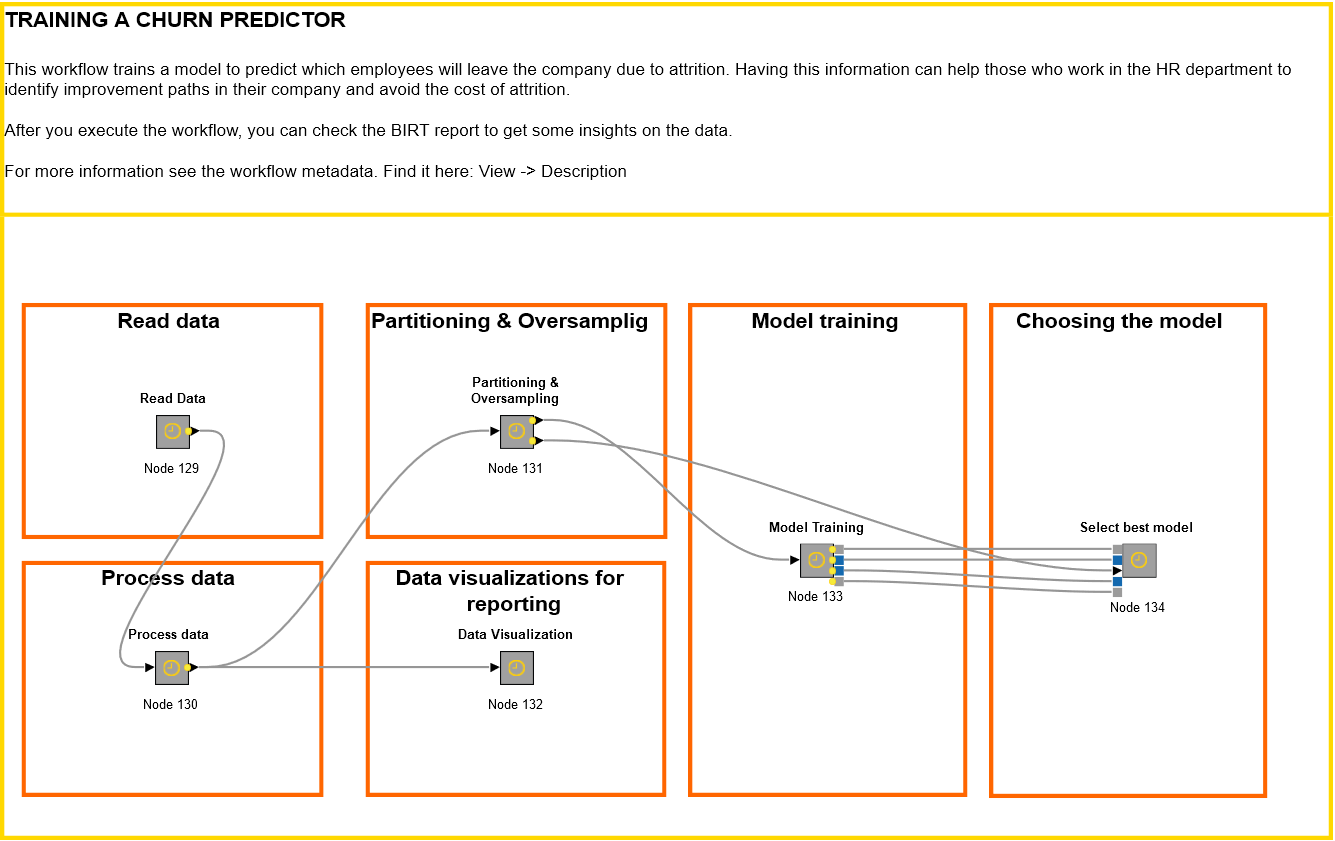

Tutorial Machine Learning Dataiku

Confusion Matrix Accuracy Precision Recall F1 Score

Customer Retention Analysis Churn Prediction - Case

Classification Problems Performance Measurement Nick

Explaining precision and recall Andreas Klintberg Medium

Predicting Employee Attrition with Machine Learning KNIME

Comparing models in Azure Machine Learning