F1 Score Vs Accuracy

4 things you need to know about AI accuracy, precision, recall and F1

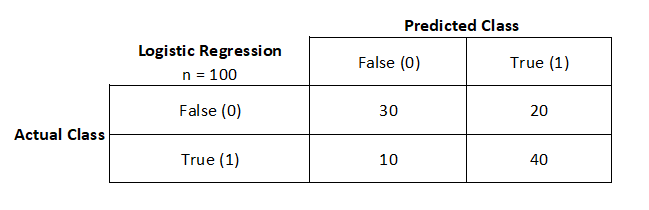

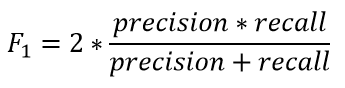

Accuracy = (990 + 989,010) / 1,000,000 = 0.99, or 99%.

3.4. F1 Score

The F1 score is the harmonic mean of precision and recall. It therefore takes both false positives (FP) and false negatives (FN) into account to strike a balance between precision and recall. Written using only the counts in the confusion matrix, the formula simplifies to

$$F_1 = \frac{2\,TP}{2\,TP + FN + FP}$$

This shows that the F score compares the true positives with the total number of positives, both predicted and actual. Our example F score would be $\frac{2 \cdot 8}{2 \cdot 8 + 2 + 1} = \frac{16}{19} \approx 0.84$, or 84%: better than recall but worse than precision. As expected, since a harmonic mean always lies between the two values it averages.

The highest possible F1 score is 1.0, which means perfect precision and recall; the lowest is 0, which occurs when either precision or recall is zero. Now that we know about precision, recall and the F1 score, we can look at some business applications and the role of these metrics.

Accuracy, recall, precision and F1 score are metrics used to evaluate the performance of a model. Although the terms might sound complex, their underlying concepts are straightforward. This tells us that our model is not performing well on spam emails and that we need to improve it. Without sklearn, recall can be computed directly as `recall = TP / (TP + FN)` and printed.

However, there is a simpler statistic that takes both precision and recall into consideration, and you can seek to maximize this number to improve your model: the F1 score, which is essentially the harmonic mean of precision and recall. Its formula depends entirely on precision and recall:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
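The arithmetic above can be checked with a few lines of plain Python, in the spirit of the "without sklearn" approach. This is a minimal sketch: the function names are our own, and the counts TP = 8, FN = 2, FP = 1 are our reading of the F1 example (they are the values that put F1 between recall and precision as described). The accuracy call assumes 990 and 989,010 are the correctly classified positives and negatives.

```python
def accuracy(tp: int, tn: int, total: int) -> float:
    """Fraction of all predictions that were correct."""
    return (tp + tn) / total


def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are actually positive."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that the model found."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_from_counts(tp: int, fn: int, fp: int) -> float:
    """Simplified form: 2TP / (2TP + FN + FP)."""
    denom = 2 * tp + fn + fp
    return 2 * tp / denom if denom else 0.0


def f1_from_scores(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


if __name__ == "__main__":
    print(accuracy(990, 989_010, 1_000_000))
    tp, fn, fp = 8, 2, 1  # assumed example counts
    p, r = precision(tp, fp), recall(tp, fn)
    print(f"precision={p:.3f} recall={r:.3f} F1={f1_from_counts(tp, fn, fp):.3f}")
    # Both F1 formulas agree up to floating-point rounding.
    print(abs(f1_from_scores(p, r) - f1_from_counts(tp, fn, fp)) < 1e-12)
```

The zero-denominator guards return 0.0 by convention; scikit-learn's metric functions expose a `zero_division` parameter for the same situation.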

Recall and precision come to the rescue in this case. Using precision, recall, the F1 score and a confusion matrix, we can design effective model-evaluation procedures.

5. Importance of F1 score. The F1 score is derived from recall and precision, and it is used to establish a balance between the two. The formula for the F1 score is $F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$.

Better questions to ask are about the system's precision, recall and F1 scores, and how long, with how much, and with what type of data it took to achieve those figures. Accuracy vs. precision vs. recall vs. F1 is, however, a detailed subject; see here for our 101.

Consider a dataset with two classes, say class A and class B. There may be two cases where your dataset is …

In short: $\text{Precision} = \frac{TP}{TP + FP}$, the share of predicted positives that really are positive, and $\text{Recall} = \frac{TP}{TP + FN}$, the share of actual positives that the model finds.
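To make the two-class case concrete, here is a small sketch that tallies the four confusion-matrix cells from label lists and then derives precision and recall. Treating class A as the positive class, and the label lists themselves, are illustrative assumptions; `confusion_counts` is a hypothetical helper name.

```python
from typing import Sequence, Tuple


def confusion_counts(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str = "A"
) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) with `positive` as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted A, really A
            else:
                fp += 1  # predicted A, really B
        elif actual == positive:
            fn += 1  # predicted B, really A
        else:
            tn += 1  # predicted B, really B
    return tp, fp, fn, tn


y_true = ["A", "A", "A", "B", "B", "B", "B", "B"]
y_pred = ["A", "A", "B", "A", "B", "B", "B", "B"]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision = tp / (tp + fp)  # share of predicted positives that are right
recall = tp / (tp + fn)  # share of actual positives that were found
print(tp, fp, fn, tn, round(precision, 3), round(recall, 3))
```

Swapping `positive="B"` re-labels the same predictions from the other class's point of view, which is why precision and recall are always reported for a named positive class.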

Recall. Let us apply the same logic to recall. Recall is calculated as

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{TP}{\text{Actual Positive}}$$

There you go: recall measures how many of the actual positives our model captures by labeling them as positive (true positives). Applying the same understanding, we know that recall shall be the …

Putting the figures for precision and recall into the formula for the F-score, we obtain

$$F = 2 \cdot \frac{0.75 \cdot 0.43}{0.75 + 0.43} \approx 0.55$$

Note that the F-score of 0.55 lies between the recall and precision values (0.43 and 0.75). This illustrates how the F-score can be a convenient way of averaging precision and recall in order to condense them into a single number.

You know the model is predicting at about 86% accuracy because its predictions on your test set said so. But 86% accuracy on its own is not a good enough metric: it uncovers only half the story, and sometimes it may give you the wrong impression altogether. Precision, recall and a confusion matrix are safer. Let's take a look.

The traditional F-measure is calculated as follows:

$$\text{F-Measure} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

This is the harmonic mean of the two fractions. It is sometimes called the F-score or the F1-score and might be the most common metric used on imbalanced classification problems.

Precision measures the accuracy of the positive predictions: $\text{Precision} = \frac{TP}{TP + FP}$.

F1-score. The F1-score keeps the balance between precision and recall. It is often used when the class distribution is uneven, but it can also be defined as a statistical measure of the accuracy of an individual test:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
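A quick way to see why the F-score is a harmonic rather than an arithmetic mean is to compare the two on the 0.75/0.43 example and on a lopsided pair. The second pair (precision 1.0, recall 0.01) is a made-up illustration, not from the text.

```python
def f_score(precision: float, recall: float) -> float:
    """F1: harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def arithmetic_mean(a: float, b: float) -> float:
    return (a + b) / 2


# (precision, recall) pairs: the text's example, then a lopsided one.
for p, r in [(0.75, 0.43), (1.0, 0.01)]:
    print(
        f"precision={p:.2f} recall={r:.2f} "
        f"F1={f_score(p, r):.3f} arithmetic mean={arithmetic_mean(p, r):.3f}"
    )
```

For the first pair the two averages are close (about 0.55 vs. 0.59), but for the lopsided pair the arithmetic mean stays near 0.5 while F1 drops to about 0.02, so a perfect precision cannot hide a terrible recall.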

Especially interesting is experiment BIN-98, which has an F1 score of 0.45 and a ROC AUC of 0.92. The reason is that a threshold of 0.5 is a really bad choice for a model that is not yet fully trained (only 10 trees). You could get an F1 score of 0.63 by setting the threshold at 0.24 instead.

[Figure: F1 score by threshold]

The F1 formula can also be written equivalently as $F_1 = \frac{2\,TP}{2\,TP + FP + FN}$. Notice that the F1-score takes both precision and recall into account, which means it accounts for both FPs and FNs. The higher the precision and recall, the higher the F1-score. The F1-score ranges between 0 and 1; the closer it is to 1, the better the model.

6. The precision is the proportion of relevant results in the list of all returned search results. The recall is the ratio of the relevant results returned by the search engine to the total number of relevant results that could have been returned.

In our case of predicting whether a loan would default, it would be better to have a high recall as …

Similarly, in machine learning we have performance metrics to check how well our model has performed, such as the confusion matrix, precision, recall and F1 score.

To answer this question, I would like to take one simple example. A school is running a machine-learning primary diabetes scan on all of its students. The output is either diabetic (+ve) or healthy (-ve). There are only 4 cases any student X could end up with. We'll be using …
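The BIN-98 observation, that the default 0.5 cut-off can badly understate F1, suggests sweeping the decision threshold. The sketch below does that on a tiny made-up set of labels and scores (not the BIN-98 data); `f1_at_threshold` and `best_threshold` are hypothetical helper names.

```python
from typing import Sequence


def f1_at_threshold(
    y_true: Sequence[int], scores: Sequence[float], threshold: float
) -> float:
    """F1 when every score >= threshold is labeled positive (1)."""
    tp = fp = fn = 0
    for actual, score in zip(y_true, scores):
        predicted = score >= threshold
        if predicted and actual == 1:
            tp += 1
        elif predicted:
            fp += 1
        elif actual == 1:
            fn += 1
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(
    y_true: Sequence[int], scores: Sequence[float], thresholds: Sequence[float]
) -> float:
    """Return the first threshold that gives the highest F1."""
    return max(thresholds, key=lambda t: f1_at_threshold(y_true, scores, t))


# Made-up scores from an under-trained model: positives get low-ish scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.45, 0.30, 0.60, 0.20, 0.10, 0.35, 0.05, 0.15]
candidates = [0.1, 0.2, 0.25, 0.3, 0.4, 0.5]

for t in candidates:
    print(f"threshold={t:.2f} F1={f1_at_threshold(y_true, scores, t):.3f}")
print("best:", best_threshold(y_true, scores, candidates))
```

Choosing the threshold on the same data you report F1 on will overstate performance; picking it on a held-out validation split is the usual safeguard.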

Accuracy, precision and recall are evaluation metrics for machine learning and deep learning models. Accuracy indicates, among all the test examples, how many the model predicts correctly compared with their actual values. However, consider a binary imbalanced …

Evaluation is mainly done by comparing two things: Prediction and Reality. First, find some test data whose outcomes are known to be 100% correct. Then feed that test data to the AI model while keeping the correct outcomes yourself; these are termed "Reality". Then, when you get the …

F1 score provides a balance between recall and precision. A classification model is considered ideal when F1 score = 1 and a complete failure when F1 score = 0. Basically, a high F1 score requires high values of both recall and precision, meaning that we are minimizing the false predictions of our model (FN and FP).

Society of Data Scientists, January 5, 2017 at 8:24 am: It is helpful to know that the F1/F score is a measure of how accurate a model is, using precision and recall following the formula

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

Precision is commonly called positive predictive value.
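The imbalanced binary case is where accuracy and F1 part ways most sharply. This sketch uses a made-up dataset of 10 positives and 990 negatives to compare a model that always predicts negative with one that finds 8 of the 10 positives at the cost of 4 false alarms; all numbers are illustrative assumptions.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the actual labels (Reality)."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def f1(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """F1 for the positive class, via 2TP / (2TP + FP + FN)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Reality: 10 positives followed by 990 negatives.
y_true = [1] * 10 + [0] * 990

# Model 1 never predicts the positive class.
always_negative = [0] * 1000

# Model 2: 8 TP, 2 FN on the positives; 4 FP, 986 TN on the negatives.
useful_model = [1] * 8 + [0] * 2 + [1] * 4 + [0] * 986

models = [("always negative", always_negative), ("useful model", useful_model)]
for name, y_pred in models:
    acc = accuracy(y_true, y_pred)
    print(f"{name}: accuracy={acc:.3f} F1={f1(y_true, y_pred):.3f}")
```

Accuracy barely moves (0.990 to 0.994) while F1 goes from 0 to about 0.73, which is why F1 is usually preferred over accuracy on imbalanced data.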

If you want to use a metric function from `sklearn.metrics`, you will need to convert predictions and labels to NumPy arrays with `to_np=True`. Also, scikit-learn metrics adopt the convention `y_true, y_preds`, which is the opposite of ours, so you will need to pass `invert_arg=True` to make `AccumMetric` do the inversion for you.

The confusion matrix gives you a lot of information, but sometimes you may prefer a more concise metric, such as the precision of the positive predictions: $\text{Precision} = \frac{TP}{TP + FP}$, where TP is the number of true positives and FP is the number of false positives. A trivial way to have perfect precision is to make one single positive prediction and ensure it is correct (precision = 1/1 = 100%).

Accuracy, precision, recall, F1-score: you will need to find the baseline accuracy for your dataset by calculating the percentage of positive or negative labels, whichever is higher. A good classifier should achieve an accuracy much higher than the baseline accuracy. The tasks in this activity are: (i) build a neural network …

So accuracy, F1 score, precision and recall: I call them the famous four. They are external evaluation metrics used with all kinds of models to evaluate the final prediction of an ML model. But again, for the AI-900 this is all we need to know. So there you go. Hey, this is Andrew Brown from ExamPro.
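Two of the checks in this passage, the majority-class baseline accuracy and the single-prediction route to perfect precision, fit in a short sketch. The label counts (30 positives, 70 negatives) and the helper names are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def baseline_accuracy(labels: Sequence[Hashable]) -> float:
    """Accuracy of always predicting the most common label."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, recall), using 0.0 when a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


labels = [1] * 30 + [0] * 70
print("baseline accuracy:", baseline_accuracy(labels))

# One confident, correct positive prediction; the other 29 positives are missed.
p, r = precision_recall(tp=1, fp=0, fn=29)
print(f"precision={p:.0%} recall={r:.1%}")
```

A useful classifier should clear the 0.7 baseline comfortably, and the 100% precision / 3.3% recall pair shows why precision is never reported on its own.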
